[Dissertation] Robust Event-based Angular Velocity Estimation in Dynamic Environments

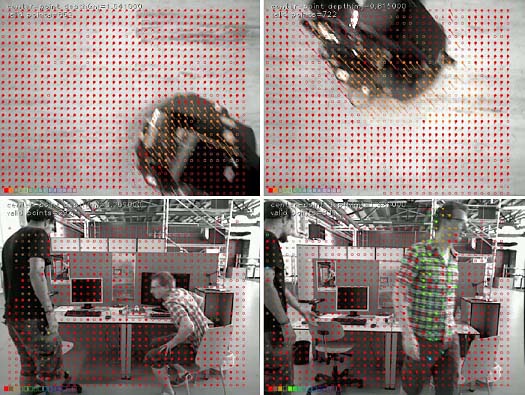

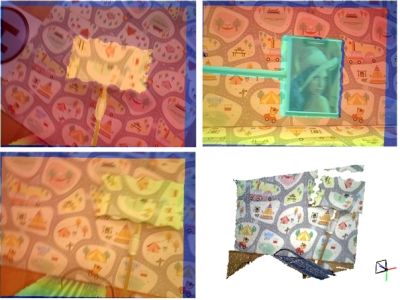

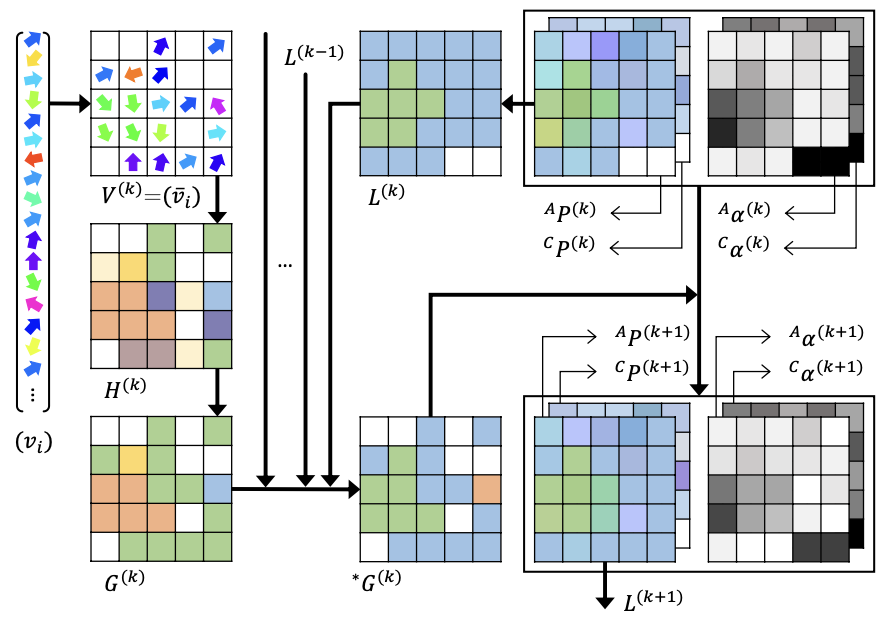

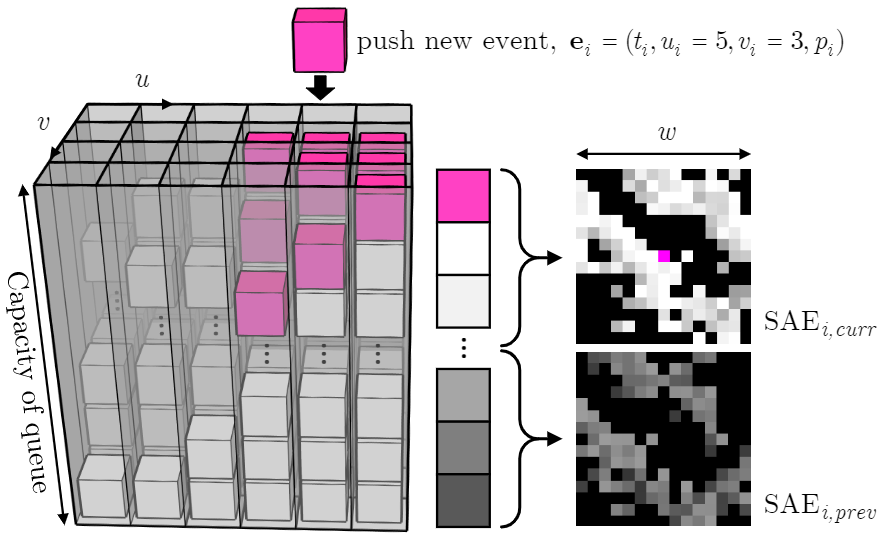

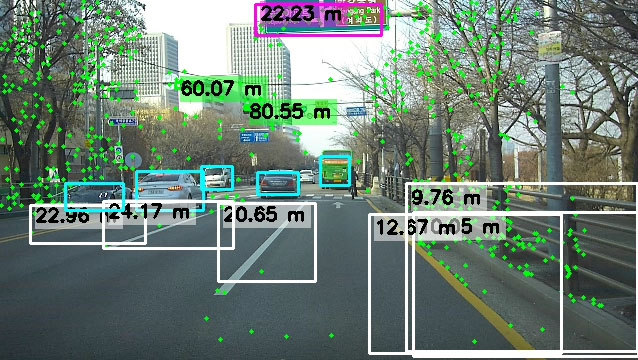

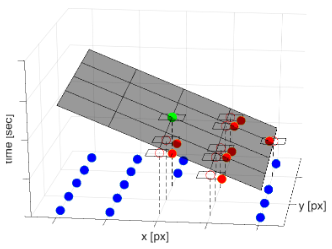

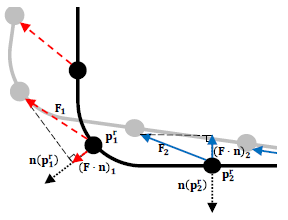

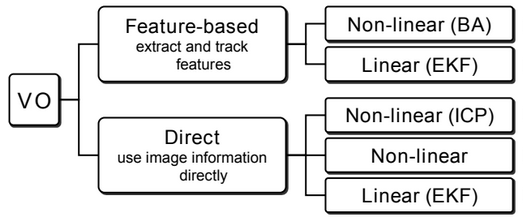

Abstract: This dissertation addresses the problem of estimating the angular velocity of an event camera robustly in dynamic environments that contain moving objects. Such vision-based navigation problems have mainly been addressed with frame-based cameras. The traditional frame-based came...